Types of Evaluation: Focus, Measures, Application

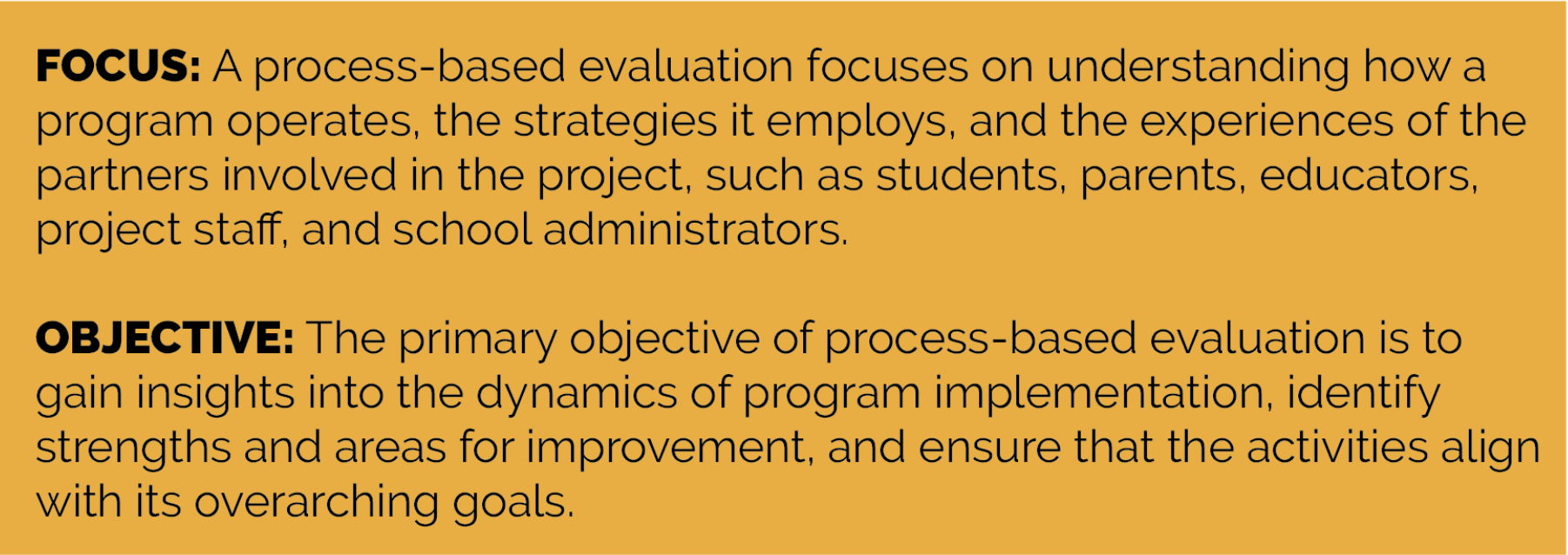

Entry 2: Process-Based Evaluation

This entry continues our previous discussion of the types of evaluation (see blog entry 1) and outlines a simple, step-by-step approach to conducting a process-based evaluation. Let's dive into what process evaluation entails.

The starting point of any evaluation is to clarify what you want to achieve through the evaluation and what specific changes or improvements you hope to make in how your program is implemented. For a process evaluation, we need to:

- Consider the key areas of focus, such as program fidelity, participant engagement, resource utilization, or program adaptations.

- Focus on outcomes that are directly related to the process aspects of program delivery, such as increased adherence to the program plan, enhanced participant engagement, or improved resource allocation.

Setting clear goals and objectives for your process evaluation creates a roadmap for tracking how well your program is doing and identifying what changes it needs to succeed. The steps below use an illustrative example: a college access program that offers parents workshops, presentations, and campus visits about the college-going process.

Developing Process-Related Evaluation Questions

Let's talk about developing process-related evaluation questions. These questions should focus on the program's components and structure. Here are a few example questions, organized by key focus area:

Implementation Fidelity: To what extent are the program activities being carried out according to the designed plan? We want to assess whether the activities were offered as planned, how closely program staff followed the schedules, and how information about the program was distributed to the target audience.

Participant Engagement: How actively are participants involved in the program activities? Here we want to see whether the program reaches the expected number of participating parents, as well as their level of interest in and satisfaction with the activities offered.

Resource Utilization: Are the allocated resources (such as materials, time, and personnel) being used effectively to support program delivery? We are interested in how efficiently facilitators use time while conducting targeted activities, whether timing affects participation, and whether any staffing issues affect program delivery.

Adaptations: Have any modifications or adaptations been made to the program content or delivery methods? If so, what are they, and why were they made? If we find problems with program implementation (consistency, timing, outreach, or parent engagement), we should recommend modifications or adjustments to improve it. For example, you might suggest changing the timing or duration of the program based on parents’ feedback, or modifying the format of certain activities to target different groups based on their level of familiarity with the college-going process.

Collecting Data

Once our questions are in place, we need to align our data collection methods with them so that we gather the information needed to answer each process question. Here's how we can do that:

Attendance Records: Collect sign-in sheets or other participation records to see if there are any trends in participation based on the dates or timing of the activities.

Surveys and Questionnaires: Create and administer surveys for participants and others involved in the program (parents, program staff, facilitators, presenters, etc.) to find out what they think about the various activities and whether they faced any hurdles. Ask specific questions to gauge their satisfaction, and ask for suggestions on how the program could better meet their needs.

Observations: Conduct direct observations of program activities (workshops, presentations, campus visits) to assess implementation fidelity and participant engagement. Observation checklists and field notes help you record what you see, along with your comments.

Interviews: Schedule interviews with program staff and participants to delve deeper into their experiences, perceptions, and any adaptations made to the program.

By aligning our data collection methods with our evaluation questions, we can gather comprehensive information to assess the processes involved in implementing our program. This will ultimately help us make informed decisions to improve program delivery and effectiveness.
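One way to check this alignment is an evaluation matrix that maps each focus area to the methods that will inform it. The Python sketch below is illustrative only. The method assignments are hypothetical, and the two-source minimum (`min_sources`) is an assumption, chosen so that every focus area can later be triangulated.

```python
# Hypothetical evaluation matrix: each focus area is mapped to the data
# collection methods planned for it. The assignments are illustrative only.
EVALUATION_MATRIX = {
    "Implementation fidelity": ["observations", "attendance records", "interviews"],
    "Participant engagement": ["attendance records", "surveys"],
    "Resource utilization": ["observations"],
    "Adaptations": ["interviews", "surveys"],
}


def check_alignment(matrix, min_sources=2):
    """Return the focus areas covered by fewer than `min_sources` distinct methods.

    A focus area with a single data source cannot be triangulated, so it is
    flagged as needing another collection method.
    """
    return [area for area, methods in matrix.items()
            if len(set(methods)) < min_sources]


if __name__ == "__main__":
    for area in check_alignment(EVALUATION_MATRIX):
        print(f"Needs another data source: {area}")
```

In this made-up matrix, resource utilization relies only on observations. That flags it as a candidate for an added survey or interview question.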

Analyzing Data

Let's discuss how we analyze the data collected from our process evaluation.

Quantitative Data Analysis: If we gather quantitative data, such as attendance records or scores from program evaluation surveys, we can start by organizing this data into charts, graphs, or tables to visualize trends and patterns. For example, we can calculate average attendance rates across different program activities or examine changes in participant satisfaction scores over time.
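The following minimal Python sketch makes the attendance calculation concrete. It assumes sign-in sheets have been tallied into one record per session, giving the number of parents who attended and the number expected. The activity names, time slots, and counts are hypothetical, not program data. The sketch averages the per-session attendance rate by activity and by time slot, which is one way to spot the timing trends mentioned under Attendance Records.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical session records compiled from sign-in sheets:
# (activity, time slot, parents who attended, parents expected).
SESSIONS = [
    ("FAFSA workshop", "evening", 18, 20),
    ("FAFSA workshop", "afternoon", 6, 20),
    ("Campus visit", "weekend", 15, 25),
    ("Campus visit", "weekend", 20, 25),
    ("College fair presentation", "evening", 12, 15),
]


def average_rate_by(sessions, key_index):
    """Average the per-session attendance rate (attended / expected),
    grouped by the field at `key_index` (0 = activity, 1 = time slot)."""
    rates = defaultdict(list)
    for record in sessions:
        attended, expected = record[2], record[3]
        rates[record[key_index]].append(attended / expected)
    # Round to two decimals so the summary reads cleanly in a table or chart.
    return {group: round(mean(values), 2) for group, values in rates.items()}


if __name__ == "__main__":
    print("By activity:", average_rate_by(SESSIONS, 0))
    print("By time slot:", average_rate_by(SESSIONS, 1))
```

Averaging per-session rates weights every session equally. If session sizes differ a lot, dividing total attendance by total expected attendance for each group may be the better summary.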

Qualitative Data Analysis: If we collect qualitative data through interviews or observations, we can use thematic analysis to identify recurring themes, patterns, and insights. For example, we might identify common barriers to program implementation or strategies to facilitate engagement.
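Identifying themes is interpretive work done by the evaluator. Once excerpts from interviews or field notes have been coded, though, a simple tally shows which themes recur most often. The Python sketch below assumes that coding has already been done. The excerpts and theme labels are hypothetical.

```python
from collections import Counter

# Hypothetical excerpts from interview and observation notes, each already
# tagged by the evaluator with one or more theme codes. Assigning the codes
# is the interpretive part of thematic analysis; this script only tallies them.
CODED_EXCERPTS = [
    {"source": "parent interview 1", "themes": ["schedule conflict", "childcare"]},
    {"source": "parent interview 2", "themes": ["schedule conflict"]},
    {"source": "facilitator interview", "themes": ["limited staffing"]},
    {"source": "workshop observation", "themes": ["schedule conflict", "hands-on activity"]},
]


def theme_frequencies(excerpts):
    """Count how many excerpts mention each theme (at most once per excerpt)."""
    counts = Counter()
    for excerpt in excerpts:
        counts.update(set(excerpt["themes"]))
    return counts


if __name__ == "__main__":
    for theme, n in theme_frequencies(CODED_EXCERPTS).most_common():
        print(f"{theme}: {n} excerpt(s)")
```

A frequent theme is not automatically the most important one. The counts are a starting point for discussion, not a substitute for reading the excerpts in context.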

Triangulation: Cross-reference quantitative results, such as survey scores and attendance, with qualitative insights and narratives from interviews and observations to get a fuller, more reliable picture of how the program is working.
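Triangulation is ultimately a judgment call, but a simple cross-check can show where sources agree. The Python sketch below assumes each data source has already been summarized per activity. The activity names, values, and thresholds (`low_attendance`, `low_satisfaction`) are hypothetical. A concern flagged by two or more independent sources is treated as corroborated. A concern from a single source is marked for follow-up rather than acted on directly.

```python
# Hypothetical per-activity summaries from three different data sources.
ATTENDANCE_RATE = {"FAFSA workshop": 0.6, "Campus visit": 0.7}     # sign-in sheets
MEAN_SATISFACTION = {"FAFSA workshop": 4.5, "Campus visit": 3.1}   # 1-5 survey scale
BARRIER_THEMES = {"FAFSA workshop": ["schedule conflict"], "Campus visit": []}  # interviews


def triangulate(activity, low_attendance=0.65, low_satisfaction=3.5):
    """Flag a concern from each data source for one activity and report
    whether independent sources corroborate each other.

    The thresholds are illustrative assumptions, not recommended cutoffs.
    """
    flags = {
        "attendance records": ATTENDANCE_RATE[activity] < low_attendance,
        "surveys": MEAN_SATISFACTION[activity] < low_satisfaction,
        "interviews": len(BARRIER_THEMES[activity]) > 0,
    }
    concerns = [source for source, flagged in flags.items() if flagged]
    if len(concerns) >= 2:
        verdict = "corroborated concern"
    elif len(concerns) == 1:
        verdict = "single-source concern: follow up"
    else:
        verdict = "no concern"
    return verdict, concerns


if __name__ == "__main__":
    for name in ATTENDANCE_RATE:
        print(name, triangulate(name))
```

In this made-up data, the campus visit has acceptable attendance but low satisfaction and no matching interview theme. That kind of discrepancy is worth exploring in follow-up interviews before recommending changes.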

Interpreting Findings and Developing Recommendations

Based on the analysis, interpret the findings to draw conclusions about the effectiveness of the program activities. Identify strengths and weaknesses, and make recommendations for improvement. These could include adjusting certain activities, changing delivery methods, or providing additional support to parents in the areas where they need it most. Ongoing adjustments based on analysis of program implementation are essential to the program's success.

Reporting

Finally, communicate your evaluation findings clearly and concisely, whether through a written report, a presentation, or an infographic. Use plain language and avoid jargon so that your audience can understand the findings. Reporting can also include sharing preliminary findings with program staff, parents, and administrators to gather their perspectives, validate your interpretations, and identify additional insights or recommendations.

In conclusion, conducting a process-based evaluation involves defining objectives, developing process-related evaluation questions, collecting and analyzing data, interpreting findings, and reporting results. By following these steps, all of your program's partners can gain valuable insights into its implementation and make informed decisions about how to improve its effectiveness.

For more information and tools to support your evaluation practice, see the following resources:

https://www.evalcommunity.com/career-center/best-practices-for-successful-monitoring-and-evaluation-process/

https://www.mentoring.org/resource/getting-started-with-program-evaluation-2-planning-a-process-evaluation/

Contributed By Nadia Kardash

Nadzeya (Nadia) Kardash, Ph.D., is an Associate Researcher with the Research, Evaluation & Dissemination Department in the Center for Educational Opportunity Programs. She currently conducts research and evaluation of the CEOP’s federally funded college access programs, including GEAR UP, TRIO, and others.

Follow @CEOPmedia on Twitter to learn more about how our Research, Evaluation, and Dissemination team leverages data and strategic dissemination to improve program outcomes and increase the visibility of college access programs.