Don’t Just Be a Bench(mark) Warmer

Although it may at first feel like just another set of boxes to check, a well-designed system of benchmarks will help your entire team stay connected and work toward the same goals. Benchmarks should serve as regular reminders of why you provide the services you do. They should also help you prioritize some activities and services over others, which can simplify decision-making and the allocation of limited resources.

If everyone uses the same set of benchmarks to guide their daily work, it creates consistency for the entire staff and helps them prioritize the activities that matter the most to the program as a whole. As Kimberly Morgan from KU GEAR UP Diploma+ said, “I know I can lead my team to success once I know what success actually is.”

How to Create Benchmarks

Benchmarks should link your everyday activities and services with the higher-level objectives specified in your grant. The simplest and most effective way to create benchmarks for your program is to start from your logic model, because benchmarks should represent actionable steps that your team can take to achieve the high-level goals and long-term commitments of your program. If you do not have a solid logic model for your program, I recommend creating one first and then returning to the process of setting benchmarks.

When it comes to creating benchmarks, especially the very first time you try, don’t let the perfect be the enemy of the good. Sometimes there just is not enough information to craft a well-informed benchmark for a specific activity. However, creating and measuring progress toward a benchmark this year means that you will definitely have more information to help you refine that benchmark next year.

To create benchmarks, set a goal for each of the activities in your logic model. Each benchmark should be SMART: specific, measurable, achievable, relevant, and time-bound. The details of each benchmark should be based on three factors:

1. Prior data (e.g., how many seniors participated in the workshop last year?)
2. Program capacity (e.g., do we have the same number of staff members available to run the sessions?)
3. Relative priority compared with other activities (e.g., which is more important and impactful to our program: taking students to visit college campuses or helping them complete their FAFSA?)

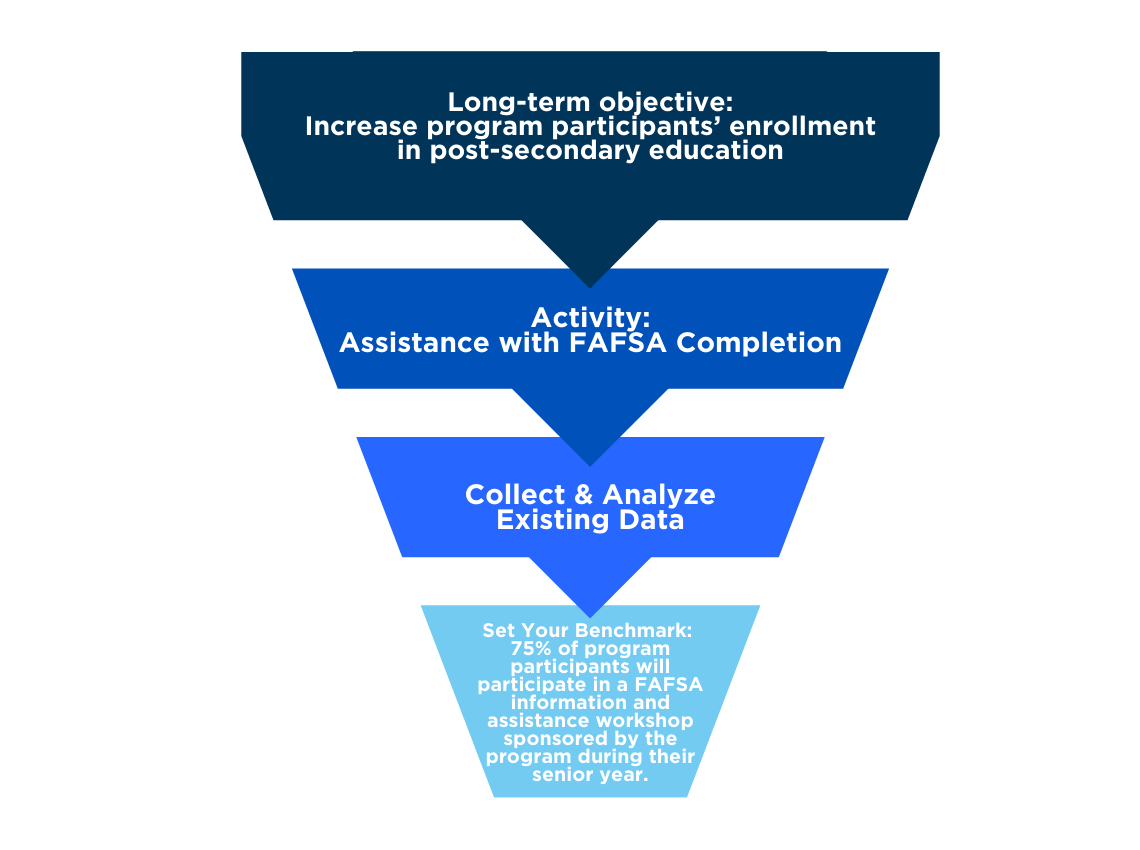

Here is an example of how one college access program created a benchmark for one of its key program activities, the FAFSA workshop.

The long-term objective from the logic model: Increase program participants’ enrollment in post-secondary education.

Activity (one of several) linked to that objective from the logic model: Assistance with FAFSA completion.

The program staff knew that in the previous year, about two-thirds of their participants who were then high school seniors attended a FAFSA information and assistance workshop [#1, prior data]. They also knew from informal feedback from returning staff that, while participants were very satisfied with the workshop, some Spanish-speaking families had not attended because it was offered only in English. [This was also #1, prior data; even though it was not formally collected, it was still useful for creating benchmarks.] This year, however, the program had already hired two additional program coordinators who both spoke Spanish [#2, program capacity]. Finally, FAFSA completion is, and probably always will be, a high priority for college access programs [#3, relative priority].

Based on this information, the program staff created the following benchmark: 75% of program participants will participate in a FAFSA information and assistance workshop sponsored by the program during their senior year.

How to Use Benchmarks

Progress toward benchmarks should be measured and shared with team members regularly (or team members should be trained to track their own progress).

It is critical for the entire team to understand what benchmarks are and what they are not. Benchmarks like these should be formative, not summative: they are tools for learning and adjusting during the year, not final judgments of anyone's performance. Program staff should not be made to worry that they will be punished or lose their jobs if they do not reach their benchmarks.

For example, perhaps a certain benchmark has been difficult to reach because a staff member has not been able to find the right contacts to help schedule sessions of a specific program, or perhaps the staff member is having trouble recruiting participants. They should be encouraged to ask other staff members how they have solved similar problems in the past. Maybe one person is struggling with one benchmark but surpassing another, while a colleague is in the opposite situation; they should feel comfortable sharing ideas with one another. Perhaps it turns out that a benchmark is unattainable for everyone, which likely indicates that it should be re-evaluated for the next year. That is not a failure, but rather a very useful data point!

By drafting benchmarks and keeping an eye on your progress toward them, you and your team will find yourselves more connected with the mission of your program and with each other as you work to realize that mission. You might be surprised by what you learn and where it leads you, but you won’t know until you try!

Contributed By Joanna Full

Joanna Full is an Associate Researcher for the Research, Evaluation & Dissemination Department at the Center for Educational Opportunity Programs. She currently manages the data collection processes and conducts the formative and summative evaluations of several of CEOP’s federally funded college access programs, including GEAR UP and Talent Search.

Follow @CEOPmedia on Twitter to learn more about how our Research, Evaluation, and Dissemination team leverages data and strategic dissemination to improve program outcomes and increase the visibility of college access programs.